Music

03.07.2011 11:12 · Notes · music

“Strings of Fire” (also known as “Dueling Violins”) from “Lord of The Dance” is amazing.

Here I post my thoughts, QGIS tips and tricks, updates on my QGIS-related work, etc.

20.06.2011 08:02 · GIS · qgis, release

After several delays and release postponements, QGIS 1.7 “Wrocław” has finally been released.

There are many reasons for the delays, but there are two main ones. The first is that the developers decided to devote the last meeting to bug fixing, and the second is an update to the project’s infrastructure. The repository is now hosted on GitHub, which has led to a revision of the repository access policy. The old Trac bug tracker has also been replaced by Redmine.

This release is notable not only because its date was pushed back several times but also because it is the last release in the 1.x series (at least, that is the plan). The next release will be QGIS 2.0, which is expected to bring many groundbreaking changes: API updates, a final transition to the new symbology, and more. Point releases of QGIS 1.7.x, with bug fixes but no new features, will be prepared from time to time. Closer to the release of QGIS 2.0, an interim 1.9.x release is planned for a limited number of users.

Now about QGIS 1.7.0. This release contains over 300 fixes and many improvements. You can read the detailed description of the changes in the official announcement, but I will limit myself to a short list:

gdaldem and gdaltindex

Marco Hugentobler has implemented the ability to embed layers and groups from other QGIS projects into a current project (available in both QGIS and QGIS MapServer). This can help eliminate the extra work of “laying out” data in a TOC when the same data is used in multiple projects. Simply go to the “Layer → Embed layers and groups” menu, select the source project, and select the desired layers/groups.

Embedding does not involve copying data but using links that can be either absolute or relative (depending on the project settings). Accordingly, any changes made in the source project will be reflected in the target project.
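To illustrate the difference between the two kinds of links, here is a minimal Python sketch. It is not how QGIS itself stores or resolves embedded layers; the functions `stored_link` and `resolve_link` and all file paths are hypothetical and only show why a relative link survives moving both projects together, while an absolute link keeps pointing at the old location.

```python
import posixpath


def stored_link(source_project, target_project, relative=True):
    """Return the path that would be written into the target project.

    Hypothetical helper: both arguments are absolute POSIX paths.
    """
    if not relative:
        return posixpath.normpath(source_project)
    target_dir = posixpath.dirname(target_project)
    return posixpath.relpath(source_project, start=target_dir)


def resolve_link(link, target_project):
    """Turn a stored link back into an absolute path to the source project."""
    target_dir = posixpath.dirname(target_project)
    # join() ignores target_dir when the link is already absolute
    return posixpath.normpath(posixpath.join(target_dir, link))


# Hypothetical layout: a shared base project and a map that embeds from it
source = "/gis/base/base.qgs"
target = "/gis/maps/city.qgs"

rel = stored_link(source, target, relative=True)
abs_ = stored_link(source, target, relative=False)

# After copying the whole /gis tree to /backup/gis, only the relative
# link follows the move; the absolute one still points at /gis.
moved_target = "/backup/gis/maps/city.qgs"
rel_after_move = resolve_link(rel, moved_target)
abs_after_move = resolve_link(abs_, moved_target)
```

With relative links the whole directory tree can be copied to another machine; with absolute links the source project has to stay where it was.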

If you need “real” embedding, the ImportLayersFromProject plugin (by Barry Rowlingson), written just the day before, comes to the rescue. The plugin also analyses the source project, but it transfers a layer completely from one project to another. This removes the dependency on the source project, which can then be modified or even deleted without losing the data embedded in the target project.

As time has shown, the migration of the QGIS repository to GitHub has been successful. Now the second phase is underway: replacing Trac with Redmine.

Trac is currently available in read-only mode. Redmine is already deployed and available at https://issues.qgis.org and the OSGeo ID is used to log in. If you find any problems, please report them to https://issues.qgis.org/projects/qgis-redmine.

02.06.2011 13:24 · GIS · qgis, howto

Often, novice QGIS users who have downloaded satellite images from Earth Explorer or other sources ask themselves: “What do I do with these files, and why do I see a black rectangle or a black and white image instead of a nice color image?” The thing is that satellite images are usually distributed as separate files, each of which corresponds to a specific radiation range (channel). To get a beautiful and informative image, these channels (often called bands) should be combined, and then, depending on the task, we need to choose the right band combination.

In this post I will show you how to do this in QGIS. I will assume that QGIS is already installed and that you already have a set of rasters that make up a multi-band image, such as a Landsat scene.
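Outside QGIS, the idea of combining bands can be sketched in a few lines of plain Python. This is only an illustration of the concept, not the QGIS workflow: the function `stack_bands` is hypothetical, the tiny 2×2 grids stand in for real rasters, and the band numbers assume Landsat 5/7-style numbering (1 = blue, 2 = green, 3 = red, 4 = near infrared).

```python
def stack_bands(bands, combination):
    """Combine single-band grids into one grid of (R, G, B) tuples.

    bands: dict mapping band number -> 2D list of pixel values
    combination: three band numbers shown as red, green and blue
    """
    red, green, blue = (bands[n] for n in combination)
    return [
        [(r, g, b) for r, g, b in zip(row_r, row_g, row_b)]
        for row_r, row_g, row_b in zip(red, green, blue)
    ]


# Made-up pixel values; each entry plays the role of one single-band file
bands = {
    1: [[10, 20], [30, 40]],  # blue
    2: [[11, 21], [31, 41]],  # green
    3: [[12, 22], [32, 42]],  # red
    4: [[90, 80], [70, 60]],  # near infrared
}

natural = stack_bands(bands, (3, 2, 1))      # natural colour composite
false_color = stack_bands(bands, (4, 3, 2))  # colour-infrared composite
```

Each single-band grid on its own is just a greyscale picture, which is why an individual file looks black and white; the colour appears only once three bands are assigned to the red, green and blue channels.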

I finally completed and published my translation of the PyQGIS Developer CookBook, a reference guide for Python developers who want to use the QGIS libraries. It is available as an online version and as a PDF for offline use.

The translation is not perfect (as my English is far from perfect) and will be improved gradually. I will keep the translation in sync with updates to the original PyQGIS CookBook.

Now I can start translating another manual, especially since I already have a candidate in mind. I won’t tell you which one. Out of spite. I will only say that it is also related to GIS.

23.05.2011 13:33 · GIS · howto

The MODIS Active Fire Product (MOD14) Science Processing Algorithm, MOD14_SPA, is an open-source implementation of the fire detection algorithm for MODIS satellite imagery. You can find binary builds of the tool on NASA’s Direct Readout Laboratory (DRL) site, but only for some (rather old and 32-bit) Linux distributions. Fortunately, you can also download the source archive and build the tool yourself. In this post, I will show you how to do that.

19.05.2011 16:41 · GIS · qgis, tips

Mayeul Kauffmann has done a great job comparing different ways to use OpenStreetMap (OSM) data in QGIS. He has also written a detailed guide on how to use OSM data to create beautiful, high-quality maps with routing support.

Here is a video demonstrating the use of QGIS and osm2postgresql.

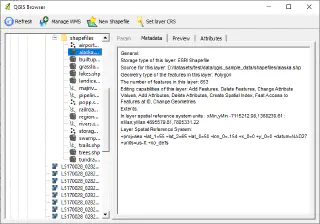

Those interested in QGIS development are probably aware that at the last developers’ meeting, among other things, Martin Dobias and Radim Blazek presented the QGIS FileBrowser application for data management.

QGIS FileBrowser is based on the QGIS libraries. It supports the same vector and raster formats as QGIS and can also work with WMS servers (information about connections is taken from the QGIS settings). The functionality is still quite limited; right now you can:

The source code of the application is available in the QGIS repository, in the browser-and-customization branch.

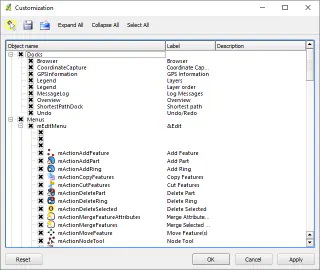

This branch also contains Martin and Radim’s work on a QGIS interface customisation tool. The new dialogue allows you to hide unnecessary widgets and save these settings to a file for use on other machines.

You can hide not only individual toolbar buttons and whole toolbars: literally anything can be customised. For example, you can remove menu items or hide individual tabs and dialogue controls.

What has been talked about for a long time has finally happened. The QGIS project has officially changed its version control system: the code has been moved from SVN to Git. The migration process is described in detail in Tim’s blog.

This is the second global migration. A few years ago, we migrated from CVS to SVN, and that was accompanied by the migration of the repository and bug tracker from SourceForge to the OSGeo infrastructure. The current migration is even more global: in addition to the switch to Git, the repository is moving to GitHub (more on that below), the bug tracker is changing (Trac will be replaced by Redmine), and we will also create a new plugin repository integrated with the bug tracker.

The new official repository is on GitHub — qgis/QGIS. As git is a distributed version control system, access to the main repository will be restricted to a few developers (i.e., many developers who previously had access will lose it). Everyone else can work in their own repositories and either submit patches (created using git format-patch) or send a pull request (if your repository is also on GitHub).

Now a bit of grumbling. The move to Git is a good step, but I personally don’t like the fact that the official repository is on GitHub. I don’t understand why the repository can’t be on the OSGeo servers. The rest of the infrastructure will be there anyway, so everything would be interconnected, and a single login would give access to all services (bugtracker, svn, ftp…). As it stands, the setup is not very convenient, and there is also a dependency on a third party.

P.S.: Although version 1.7 has already been branched, the release will be delayed until the project’s infrastructure update is complete. The release branch is accepting fixes that do not affect strings, and package maintainers can already prepare test builds and make the necessary changes.